Amazon has generated a lot of excitement around their recent release of Athena, an ANSI-standard query tool that works with data stored in Amazon S3. Athena and S3 can deliver results quickly and with the power of sophisticated data warehousing systems. This article covers 9 things you should know about Athena when considering it as a query service.

1. Schema and Table Definitions

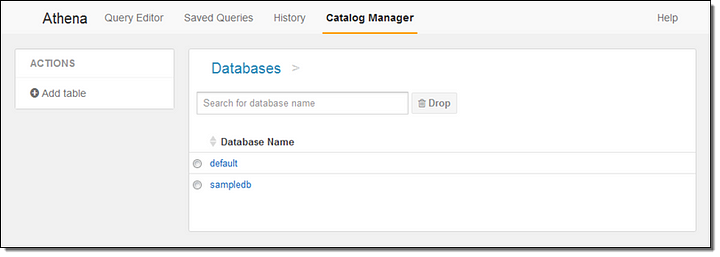

To be able to query data with Athena, you will need to make sure you have data residing on S3. With data on S3 you will need to create a database and tables. When creating schemas for the data on S3 the positional order is important. For example, if you have a source file with “ID”, ”DATE”, ”CAMPAIGNID”, ”RESPONSE”, ”ROI” and ”OFFERID” columns, then your schema should reflect that structure.

“ID”,”DATE”,”CAMPAIGNID”,”RESPONSE”,”ROI”,”OFFERID”“0122”,”December 10, 2015",”123432100A”,”8.43",”A102B”,”4985"“0123”,”December 10, 2015",”543421111A”,”2.61",”A904F”,”3212"

If you do not account for the position, you will end of up something that would not align with expectations.

Once the process is complete, all database and tables are automatically stored within the system and accessible via JDBC.

2. Data Formats

The service works with a number of different data formats. These include ORC, JSON, CSV, and Parquet. Amazon suggests converting data to columnar formats using Apache Parquet. Make sure your team is aware of it. In addition, a compressed and columnar format can lower query and storage costs while helping to further improve performance. Amazon also suggests partitioning data to reduce the amount of data that the query needs to scan. This can boost performance and lower query costs.

3. Speed and Performance

Athena makes it quick and easy to run queries on S3 data without having to set up servers, define clusters, or do any of the housekeeping that similar query systems require. Athena makes use of the computer resources in multiple and separate Availability Zones. Amazon also provides redundant data storage, so the service promises speed, durability, and availability.

Check out the performance tips, and many other points of interest, at the AWS Blog

4. Supported Functions

Athena uses Presto as its SQL query engine. Users can enter ANSI-standard SQL into this tool and interface directly with Amazon S3 data via Athena. This includes standard SQL functions like SELECT and relational operators like JOIN. See the Facebook Presto function documentation for a full list of functions.

At this time, Athena supports only Hive DDL for table or partition creation, modification, and deletion. Here is the full list of SQL and HiveSQLfunctions supported by Athena.

5. Limitations

At this time, Athena still has some limitations. For example, the following are not supported:

- User defined functions and stored procedures

- Hive or Presto transactions

- LZO (Snappy is supported)

In addition, Amazon has imposed some limitations on queries. For instance, Athena only allows users to submit one query at a time and to have five concurrent queries running for each account. Each account is limited to 100 databases, and databases cannot have more than 100 tables. While Athena can access data from a different region than the one that initiated the query, a limited number of regions are supported at this time. This page has a full and updated list of these regions.

6. Integration With Other BI Tools

Amazon promotes this service as a way to produce result sets with SQL queries. However, the data can be used with other business intelligence tools for reporting and analysis. One obvious example is Amazon QuickSight. The services has a JDBC driver that can be used to interface with other business intelligence software.

7. Athena’s Security

Amazon offers three ways to control data access:

- AWS Identity and Access Management policies

- Access Control Lists

- Amazon S3 bucket policies

Users are in control who can access data on S3. It’s possible to fine-tune security to allow different people to see different sets of data and also to grant access to other user’s data.

8. Cost Considerations

Basically, users will pay for the amount of data scanned by queries they run. In addition, the results that get stored in S3 can incur storage charges. This briefly explains pricing:

- Athena charges $5 for each TB of data scanned.

- Queries are rounded up to the nearest MB, with a 10 MB minimum.

- Users pay for stored data at regular S3 rates.

Amazon advises users to use compressed data files, have data in columnar formats, and routinely delete old results sets to keep charges low. Partitioning data in tables can speed up queries and reduce query bills.

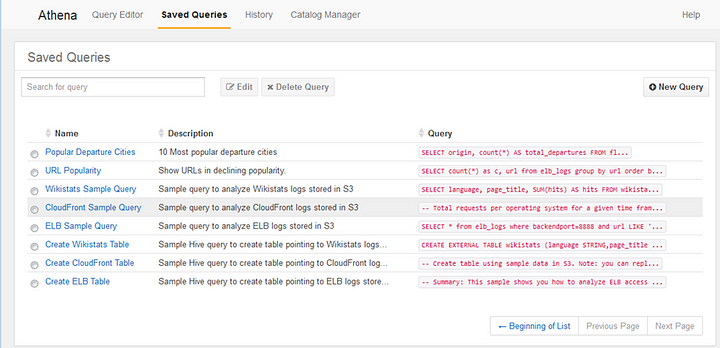

9. Interface

Athena has a simple and easy to understand interface. The menu structure is easy to navigate, and includes four main tabs: Query Editor, Saved Queries, History, and Catalog Manager. As long as you have experience running SQL-queries you will not need any specific training to use the tool.

Should you consider Amazon Athena?

The pay-for-usage pricing model may attract analysts who thought the power of this kind of querying system was out of their budget or required complex systems and DevOps support. The interface is simple to use and should be intuitive for anybody with a basic grasp of SQL. Also, Athena will increasingly integrate with sophisticated BI tools that can produce reports and visualizations.

Companies that use S3 and need a quick but robust query service might find that Athena offers an ideal solution. This is particularly true for businesses that would rather not have to set up their own Presto infrastructure or want the simplicity of using Athena for spot or ah hoc analysis. However, keep in mind that more complex the use cases may require additional expertise to accomplish various implementation details in a pursuit an optimized Athena implementation.

Feel like getting into the weeds? Check out the Athena technical docs. It is perfect reading for a Friday night.

Comments

Post a Comment